Would you like to make a Windows PC that has some network-shared folders containing large files look as if there's something horribly wrong with it?

Well, that is, I have just discovered, easy!

Just copy one of the files - let's say they're movies - over the network to another computer.

Then, before the first copy operation is complete, start copying another. And another. And another.

Like, maybe you're copying all of your absolute favourite TV shows and movies, and you're just clicking and dragging whatever catches your eye, and letting the little copy dialogs pile up.

This is exactly the sort of task that a proper file server is specifically designed to handle. Even if the server's storage isn't terribly fast, six people all asking for different things from the same drive at the same time should be put in a queue, rather than the waiter trying to carry every order for the whole restaurant at once, as it were.

But consumer versions of Windows aren't set up to do that. They're meant to serve a single user, and see nothing wrong with just doing exactly what they're told to do if several remote computers - or even just one - ask them to do something like copy 20 large files at once.

(You can probably alleviate at least some of this problem in consumer Windows versions by selecting the "background services" and "system cache" options on the Advanced tab of WinXP's Performance Options. Even a cheap solid-state drive would immensely reduce the problem, too, since the multi-millisecond seek time of mechanical hard drives is a big reason why it happens, and SSDs have near-zero seek time. But SSDs are, of course, still rather too expensive per gigabyte for tasks like bulk video storage.)
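To put rough numbers on why seek time matters so much here, this is a back-of-the-envelope model in Python. Every figure in it is an assumption picked for illustration, not a measurement from my machine: six 700 MB files, a mechanical drive that reads sequentially at 60 MB/s with a 12 ms average seek, and, when the copies are interleaved, a seek before every 64 KB chunk. The "queued" case assumes each file is streamed in one go, so the drive seeks roughly once per file.

```python
def copy_time_seconds(n_files, file_mb, rate_mb_s, seek_ms, chunk_kb=None):
    """Estimate the total time to read n_files of file_mb megabytes each.

    chunk_kb=None models queued, one-file-at-a-time reading (one seek per file).
    A chunk size models interleaved reading, where the head seeks before every chunk.
    Deliberately simplified: ignores caching, read-ahead, command queuing,
    rotational latency and the network itself.
    """
    seek_s = seek_ms / 1000.0
    # Pure transfer time is identical in both cases; only the number of seeks differs.
    transfer_s = n_files * file_mb / rate_mb_s
    if chunk_kb is None:
        seeks = n_files
    else:
        chunks_per_file = (file_mb * 1024) / chunk_kb
        seeks = n_files * chunks_per_file
    return transfer_s + seeks * seek_s


if __name__ == "__main__":
    # Assumed, illustrative figures only.
    print(f"queued, HDD:      {copy_time_seconds(6, 700, 60, 12):.0f} s")               # 70 s
    print(f"interleaved, HDD: {copy_time_seconds(6, 700, 60, 12, chunk_kb=64):.0f} s")  # 876 s
    print(f"interleaved, SSD: {copy_time_seconds(6, 700, 60, 0.1, chunk_kb=64):.0f} s") # 77 s
```

With those made-up numbers the queued copy is almost all transfer time, while the interleaved one spends over 90% of its time just moving the heads around; cut the seek time to SSD-like levels and most of the penalty disappears. Real drives and operating systems soften this with read-ahead and request reordering, so treat it as a pessimistic bound, not a prediction.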

Once you've got several large copy operations all happening at once on, let's say as a random example, the Windows XP computer on which I'm writing this, this "target" computer will be flogging to death the drive(s) on which the big files reside. Data to and from those drives probably has a bottleneck or two of its own before it gets to the network, too; the ATA I/O hardware in consumer PCs is not made to deal elegantly with several drives all talking at once.

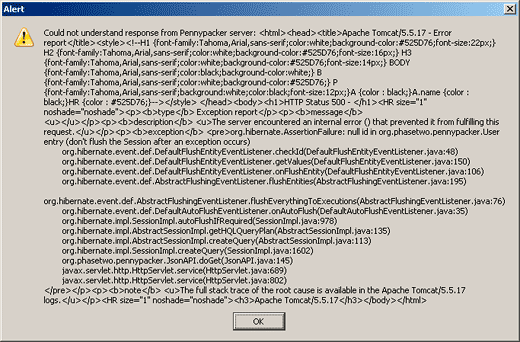

The upshot of this is that any operation which expects one of those drives to respond snappily to a request - or, quite possibly, which is just trying to talk to some other drive in the computer - will now suddenly find itself waiting a lot longer for that response. And that's one of the standard ways in which programs can misbehave. It's perfectly normal for programs to, say, ignore user input while they're saving a ten-kilobyte file; that should only take a moment. But if it now takes 30 seconds for that tiny file to be saved, as the save operation tries to push through a storm of seeking and reading, the program will appear to have hung. This applies to lots of things besides saving files - if modal windows suddenly take 30 seconds to appear, for instance, the program will seem just as broken.

On the plus side, as soon as you cancel the 20 simultaneous copy operations, the target PC will immediately start working perfectly again.

On the minus side, before you cancel the copies, it'll be doing a very good imitation of a computer with one or more failing hard drives. And practically anything you do to try to figure out what's going on will just add more input/output tasks to the mess, and make things even worse.

Eventually, the user - let's call him Dan - will try to shut the computer down, get sick of waiting for the many simple disk tasks that shutting down entails, and just turn the darn thing off. And now the metaphorical plate-juggler will forget about all of the plates that are still in the air, and leave them to crash to the floor.

In my case, this meant various programs lost some of their configuration data. Firefox, for instance, was back in the default toolbar and about:config state, and all of the extensions thought they'd only just been installed. My text editor forgot about the files I used to have open, and Eudora lost a couple of tables of contents and rebuilt them with the usual dismal results.

(If you lose the TOC in a mail client like Eudora, which uses a simple mailbox format in which the main mailbox file is just a giant slab of text and the TOC is a separate file that tells the program where actual e-mails start and end within that text, rebuilding the TOC probably won't go well. It'll give you a separate "e-mail" for every version of a given message that looks as if it might exist. So if you saved a draft five times before you finished writing it, you'll get five separate versions of that e-mail, each at a different stage of completion. When you "compact" a mailbox, you're getting rid of all that duplicate data.)
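Here's a toy Python sketch of why a rebuilt TOC goes wrong. It assumes a simplified mbox-style layout in which every stored copy of a message, including the superseded copies left behind by earlier saves of a draft, starts with a line beginning "From ", and the hypothetical `rebuild_toc` function just scans the slab for those separator lines. That's an illustrative assumption, not Eudora's actual file format or recovery code.

```python
def rebuild_toc(mailbox_text):
    """Return (start, end, subject) for every message found in the slab.

    Hypothetical rebuild: any line starting with "From " is treated as the start
    of a message, so stale copies from earlier saves get indexed too.
    Offsets are character offsets into the string, for simplicity.
    """
    starts = []
    offset = 0
    for line in mailbox_text.splitlines(keepends=True):
        if line.startswith("From "):
            starts.append(offset)
        offset += len(line)

    toc = []
    for i, start in enumerate(starts):
        # Each message runs until the next separator, or the end of the slab.
        end = starts[i + 1] if i + 1 < len(starts) else len(mailbox_text)
        message = mailbox_text[start:end]
        subject = next(
            (hdr[len("Subject: "):].strip()
             for hdr in message.splitlines() if hdr.startswith("Subject: ")),
            "",
        )
        toc.append((start, end, subject))
    return toc


# Three saves of the same draft, all still sitting in the uncompacted slab.
slab = (
    "From me\nSubject: Draft\n\nDear Bob,\n"
    "From me\nSubject: Draft\n\nDear Bob, the thing is\n"
    "From me\nSubject: Draft\n\nDear Bob, the thing is broken.\n"
)
for start, end, subject in rebuild_toc(slab):
    print(start, end, subject)
```

The lost TOC only ever pointed at the last copy, but the rebuild finds all three. In this model, compacting means rewriting the slab with only the copies the TOC actually points at, so a rebuild after compaction would find no stale drafts.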

Oh, and the file I'd been writing something in for the last hour was now a solid block of null characters. As, delightfully, was its .bak file.

I lost very little actual data, because I make regular backups. (Remember: Data You Have Not Backed Up Is Data You Wouldn't Mind Losing.) And stuff I'd done since the last backup which I wasn't actually working on when the Copy Of Doom commenced was all fine. Good old PC Inspector even let me recover a bit more data.

Even quite a lot of data loss would have been preferable to what I originally thought was going on, though. It looked as if the boot drive - at least - was failing, leading me to another of my disaster-prompted upgrades. It's about time for a new PC now anyway, but it's ever so much more civilised to upgrade when the old computer's still alive.